Shakti Bare Metal

Your AI shouldn’t compete for resources. With Shakti Bare Metal, it never will.

Shakti Bare Metal delivers dedicated GPU servers with NVIDIA HGX H100 (8 GPUs for ultra-fast training) and L40S nodes for efficient inference. With no oversubscription or virtualization, it ensures predictable, high-performance AI for enterprises and startups.

Built with the Best

Train trillion-parameter models faster on dedicated, uncompromised Shakti Bare Metal.

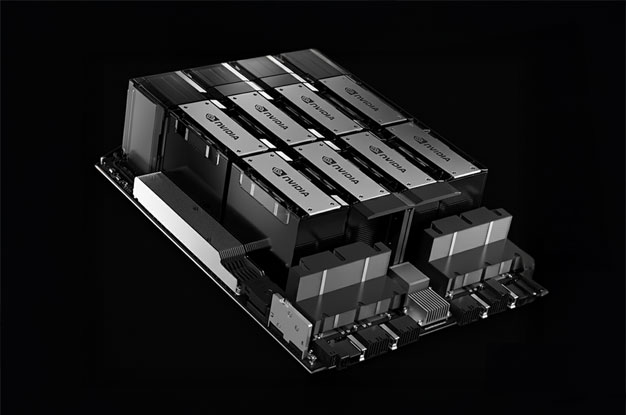

HGX H100 SXM5 is purpose-built for training trillion-parameter models, multimodal AI, and enterprise-scale generative AI applications.

L40S GPU nodes deliver ultra-low latency for conversational AI, recommendation systems, fraud detection, and personalized search engines.

Run weather forecasting, genomics, computational fluid dynamics, and seismic imaging workloads with near-linear scalability.

Enable streaming analytics, predictive maintenance, and anomaly detection by processing petabyte-scale datasets in real time.

Accelerate training and validation for self-driving cars, robotics, and edge AI systems with massively parallel compute.

Bare Metal Advantage

Peak AI Performance with Shakti Bare Metal Precision

Dedicated Single-Tenant Infrastructure

No noisy neighbors or shared resources; complete isolation for performance-sensitive AI workloads.

Maximum GPU Performance

Direct access to NVIDIA HGX H100 (8x SXM5 with NVLink + NVSwitch) and L40S GPUs, with no virtualization overhead.

Ultra-Fast Interconnects

900 GB/s GPU-to-GPU bandwidth within a node via NVLink 4.0/NVSwitch, plus InfiniBand Quantum-2 (NDR, up to 32 Tbps) between nodes for seamless multi-node scaling.

Optimized for AI Training & Inference

H100 for training foundation models, LLMs, and generative AI. L40S for large-scale inference, multi-workload processing, and energy-efficient AI ops.

Scalable & Flexible Configurations

Start with a single node and scale to clusters, tailored for enterprise, research, and startup workloads.

High-Performance Storage Integration

Designed to pair with WEKA HSS parallel file system for high-throughput, low-latency data access at scale.

Supports HPC & Advanced Simulations

Optimized for emerging workloads like autonomous systems, multimodal AI, digital twins, and metaverse-scale 3D simulations.

Sovereign AI Advantage

Hosted in India, ensuring compliance, data sovereignty, and a secure AI infrastructure platform.
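As a rough illustration of what "start with a single node and scale to clusters" can mean in practice, the sketch below estimates how many 8 x H100 nodes are needed just to hold a model's weights in GPU memory. It uses the 640 GB per-node figure from the pricing table. The 2-bytes-per-parameter default (16-bit weights), the decimal definition of a gigabyte, and the function name are assumptions made for this example. Training also needs memory for gradients, optimizer state, and activations, so real node counts will be higher.

```python
import math

GPU_MEMORY_PER_NODE_GB = 640  # 8 x H100 node, as listed in the pricing table


def min_nodes_for_weights(num_params: float, bytes_per_param: int = 2) -> int:
    """Smallest node count whose combined GPU memory holds the weights alone.

    Assumption: 1 GB = 1e9 bytes; gradients, optimizer state, and
    activations are deliberately ignored.
    """
    weights_gb = num_params * bytes_per_param / 1e9
    return math.ceil(weights_gb / GPU_MEMORY_PER_NODE_GB)


# Hypothetical model sizes, chosen only to show the scaling.
for params in (70e9, 1e12):
    nodes = min_nodes_for_weights(params)
    print(f"{params:.0e} parameters -> at least {nodes} node(s) for weights")
```

Under these assumptions a 70-billion-parameter model fits on one node, while a trillion-parameter model needs at least four nodes before any training state is counted.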

Peak Performance

Unveiling the Secrets of High-Performance Architecture


Dedicated NVIDIA HGX H100 & L40S GPUs

Bare-metal access to the latest GPU architectures for training, inference, and visualization.

Fourth-Generation NVLink 4.0 & NVSwitch Interconnects

Up to 900 GB/s GPU-to-GPU bandwidth, ensuring faster model parallelism and reduced communication bottlenecks.

InfiniBand Quantum-2 NDR 400G

Industry-leading low latency, adaptive routing, and congestion control for large-scale AI and HPC clusters.

Spectrum-4 400GbE Ethernet Networking

Flexible high-throughput connectivity for multi-tenant or hybrid workloads.

NVIDIA BlueField-3 DPUs

Offloads networking, storage, security, and infrastructure management from CPUs, delivering faster and more secure performance.

NVMe High-Speed Storage & SSD-Backed Object Storage

Accelerated data access for training datasets, inference pipelines, and high-speed checkpoints.

Full NVIDIA AI Enterprise Stack Integration

End-to-end AI software support including CUDA, TensorRT, RAPIDS, and frameworks like PyTorch/TensorFlow.
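To put the interconnect figures in context, here is a minimal sketch of the textbook bandwidth-only cost model for a ring all-reduce, the collective commonly used to synchronize gradients. It is not a description of how NCCL or NVSwitch schedule traffic on this platform. The 900 GB/s NVLink number and the 400 Gb/s NDR port speed come from the text above. Treating them as achievable throughput, using a single NDR port, and the 14 GB gradient size are assumptions for illustration only, and latency is ignored.

```python
def ring_allreduce_seconds(payload_gb: float, num_gpus: int, bandwidth_gb_s: float) -> float:
    """Bandwidth-only estimate for a ring all-reduce.

    In a ring, each GPU sends (and receives) 2 * (N - 1) / N times the
    payload, so the time is that traffic divided by the link bandwidth.
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    traffic_gb = 2 * (num_gpus - 1) / num_gpus * payload_gb
    return traffic_gb / bandwidth_gb_s


NVLINK_GB_S = 900.0      # peak GPU-to-GPU figure quoted above
NDR_PORT_GB_S = 400 / 8  # one NDR 400 Gb/s port expressed in GB/s

grads_gb = 14.0  # hypothetical: 7B parameters at 2 bytes each

t_nvlink = ring_allreduce_seconds(grads_gb, 8, NVLINK_GB_S)
t_ndr = ring_allreduce_seconds(grads_gb, 8, NDR_PORT_GB_S)
print(f"NVLink: {t_nvlink * 1000:.1f} ms, single NDR port: {t_ndr * 1000:.1f} ms")
```

With these inputs the estimate is roughly 27 ms over NVLink and 490 ms over one NDR port, which is why keeping the most communication-heavy parallelism inside a node is usually preferred.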

Why Shakti Cloud Works for You

Flexible Plans for Every AI Ambition


| GPU Type | vCPUs | Dedicated RAM | Data Drive | Interconnect | Networking | *Monthly Price (Per GPU Per Hour) |
|---|---|---|---|---|---|---|
| 8 x HGX H100 (640 GB GPU Memory) | 224 | 2 TB | 61.44 TB | NVLink, NVSwitch | InfiniBand | ₹ 332 |
| 4 x L40S (192 GB GPU Memory) | 128 | 1 TB | 7.68 TB | PCIe | Ethernet | ₹ 186 |
| 8 x HGX B200 | 112 | 2 TB | 30.4 TB | NVLink, NVSwitch | InfiniBand | ₹ 503 |

** Data Transfer: Free Ingress & Egress (For all Bare Metal Plans)

** DPU: NVIDIA BlueField-3 (For all Bare Metal)

| GPU Type | vCPUs | Dedicated RAM | Data Drive | Interconnect | Networking | *6 Month Price (Per GPU Per Hour) |
|---|---|---|---|---|---|---|
| 8 x HGX H100 (640 GB GPU Memory) | 224 | 2 TB | 61.44 TB | NVLink, NVSwitch | InfiniBand | ₹ 320 |
| 4 x L40S (192 GB GPU Memory) | 128 | 1 TB | 7.68 TB | PCIe | Ethernet | ₹ 165 |
| 8 x HGX B200 | 112 | 2 TB | 30.4 TB | NVLink, NVSwitch | InfiniBand | ₹ 477 |

** Data Transfer: Free Ingress & Egress (For all Bare Metal Plans)

** DPU: NVIDIA BlueField-3 (For all Bare Metal)

| GPU Type | vCPUs | Dedicated RAM | Data Drive | Interconnect | Networking | *1 Year Price (Per GPU Per Hour) |
|---|---|---|---|---|---|---|
| 8 x HGX H100 (640 GB GPU Memory) | 224 | 2 TB | 61.44 TB | NVLink, NVSwitch | InfiniBand | ₹ 249 |
| 4 x L40S (192 GB GPU Memory) | 128 | 1 TB | 7.68 TB | PCIe | Ethernet | ₹ 150 |
| 8 x HGX B200 | 112 | 2 TB | 30.4 TB | NVLink, NVSwitch | InfiniBand | ₹ 449 |

** Data Transfer: Free Ingress & Egress (For all Bare Metal Plans)

** DPU: NVIDIA BlueField-3 (For all Bare Metal)

| GPU Type | vCPUs | Dedicated RAM | Data Drive | Interconnect | Networking | *2 Year Price (Per GPU Per Hour) |
|---|---|---|---|---|---|---|
| 8 x HGX H100 (640 GB GPU Memory) | 224 | 2 TB | 61.44 TB | NVLink, NVSwitch | InfiniBand | ₹ 239 |
| 4 x L40S (192 GB GPU Memory) | 128 | 1 TB | 7.68 TB | PCIe | Ethernet | ₹ 148 |
| 8 x HGX B200 | 112 | 2 TB | 30.4 TB | NVLink, NVSwitch | InfiniBand | ₹ 359 |

** Data Transfer: Free Ingress & Egress (For all Bare Metal Plans)

** DPU: NVIDIA BlueField-3 (For all Bare Metal)
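Because the prices above are quoted per GPU per hour, it can help to convert them into a per-node monthly figure and a discount relative to the monthly plan. The sketch below does that arithmetic with the rates copied from the tables. It assumes the node runs continuously, that a month is 730 hours (8,760 hours per year divided by 12), and that the bill is simply rate x GPUs x hours. Taxes, minimum commitments, and any other billing terms are not modeled, and the function names are made up for this example.

```python
HOURS_PER_MONTH = 730  # assumption: 8760 hours per year / 12

# GPUs per node and INR per GPU per hour, copied from the pricing tables.
PLANS = {
    "8 x HGX H100": (8, {"monthly": 332, "6 months": 320, "1 year": 249, "2 years": 239}),
    "4 x L40S": (4, {"monthly": 186, "6 months": 165, "1 year": 150, "2 years": 148}),
    "8 x HGX B200": (8, {"monthly": 503, "6 months": 477, "1 year": 449, "2 years": 359}),
}


def node_cost_per_month(plan: str, term: str) -> int:
    """Estimated INR per node per month for a node that runs around the clock."""
    gpus, rates = PLANS[plan]
    return gpus * rates[term] * HOURS_PER_MONTH


def savings_vs_monthly(plan: str, term: str) -> float:
    """Fractional discount of a committed term relative to the monthly rate."""
    _, rates = PLANS[plan]
    return 1 - rates[term] / rates["monthly"]


for plan, (_, rates) in PLANS.items():
    for term in rates:
        cost = node_cost_per_month(plan, term)
        saving = savings_vs_monthly(plan, term)
        print(f"{plan:14s} {term:9s} INR {cost:>10,d} per month ({saving:.0%} below monthly)")
```

For example, under these assumptions the 8 x HGX H100 node comes to INR 1,938,880 per month on the monthly plan, and the 1-year rate is 25% lower per GPU-hour.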