Shakti Fine-Tuning

From Prototype to Production 15× Faster.

Our platform puts leading AI models like Llama and Qwen directly in your hands, allowing fine-tuning with SFT, GRPO, DPO, RFT, LoRA, or QLoRA to meet your exact needs. Whether it’s text, speech, or images, our distributed compute infrastructure ensures training is fast, efficient, and flexible. Teams can experiment, innovate, and deploy without hardware constraints, combining training and deployment in a single full-lifecycle platform. This is the fastest path from model to market, unlocking value from AI investments quickly.

Built with the Best

Smarter Solutions for Every Industry.

Create chatbots that understand your business and your users. They handle complex queries, maintain multi-turn conversations, and deliver context-aware responses, automating customer support while keeping engagement consistent.

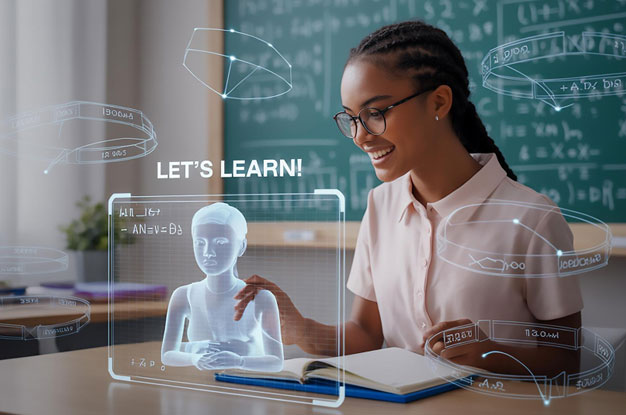

Create AI tutors that personalise learning journeys for students and professionals. By analysing user progress, learning style, and knowledge gaps, these AI tutors can recommend tailored content, adapt difficulty levels dynamically, and provide instant feedback.

Deploy virtual assistants capable of handling queries with high accuracy and speed. These assistants can resolve routine issues independently, escalate complex cases intelligently, and provide 24/7 support.

Fine-Tuning Advantage

End-to-End Capabilities for Advanced Model Operations

Advanced Model Support

Train and fine-tune the latest models, including Llama and Qwen.

Flexible Deployment

Choose between shared endpoints (cost-efficient with throttling) or dedicated endpoints (guaranteed throughput for mission-critical applications).

Seamless Integration

Deploy endpoints in minutes, integrating AI into applications without infrastructure overhead.

Elastic Scaling

Autoscaling responds in under 500 ms, so capacity follows demand without noticeable delay.

Real-Time Monitoring

Dashboards track usage, performance, and infrastructure costs.

Optimization Tools

Tune dynamically for latency, throughput, or operational cost as priorities shift.

Enterprise-Ready Security

SLAs, compliance, and continuous monitoring are built in.

Peak Performance

Engineered for Scalable, High-Throughput AI Workloads

Advanced Model Support

Shared Endpoints provide access to a curated catalog of state-of-the-art models, including LLMs, Vision AI, and Speech (ASR/TTS). Users can experiment with multiple model and MoE families to identify the best fit for their use case.

Flexible Deployment

Deploy pre-trained models instantly or connect via APIs without infrastructure setup. Shared Endpoints eliminate provisioning delays and offer immediate access to enterprise-ready inference.

Seamless Integration

OpenAI-compatible APIs and SDKs ensure drop-in integration with applications, workflows, and third-party tools. Developers can connect through REST, the CLI, or the portal.
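
As a rough illustration of what drop-in integration can look like, the sketch below builds a chat-completions request in the OpenAI wire format using only Python's standard library. It assumes the platform mirrors OpenAI's `/v1/chat/completions` path. The base URL `https://api.example.com`, the model name `example-llama`, and the helper `build_chat_request` are placeholders invented for this example, not documented platform values.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, messages):
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {"model": model, "messages": messages}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical values, for illustration only.
request = build_chat_request(
    base_url="https://api.example.com",
    api_key="YOUR_API_KEY",
    model="example-llama",
    messages=[{"role": "user", "content": "Summarise our refund policy."}],
)
# To send it, pass `request` to urllib.request.urlopen and parse the JSON reply.
```

Because the request shape matches the OpenAI format, an existing client should in principle only need its base URL and API key changed.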

Elastic Scaling

Shared Endpoints automatically adjust GPU resources based on incoming requests. Rate limits (requests per minute, tokens per minute, or audio/image caps) maintain fairness across tenants while keeping performance consistent under variable workloads.
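
How the platform enforces these limits is not described here. As one common approach, a sliding-window limiter can track both requests per minute (RPM) and tokens per minute (TPM) for a tenant. The class name `SlidingWindowLimiter` and the limit values below are assumptions made for this sketch.

```python
from collections import deque


class SlidingWindowLimiter:
    """Per-tenant limiter enforcing RPM and TPM over a rolling window."""

    def __init__(self, rpm, tpm, window_s=60.0):
        self.rpm = rpm
        self.tpm = tpm
        self.window_s = window_s
        self._events = deque()  # (timestamp, tokens) for accepted requests
        self._tokens_in_window = 0

    def _evict(self, now):
        # Drop accepted requests that have aged out of the window.
        while self._events and self._events[0][0] <= now - self.window_s:
            _, tokens = self._events.popleft()
            self._tokens_in_window -= tokens

    def allow(self, tokens, now):
        """Return True and record the request if both limits permit it."""
        self._evict(now)
        if len(self._events) + 1 > self.rpm:
            return False  # request cap reached
        if self._tokens_in_window + tokens > self.tpm:
            return False  # token cap reached
        self._events.append((now, tokens))
        self._tokens_in_window += tokens
        return True


# Illustrative limits: 2 requests and 100 tokens per 60-second window.
limiter = SlidingWindowLimiter(rpm=2, tpm=100)
first = limiter.allow(tokens=10, now=0.0)
second = limiter.allow(tokens=10, now=1.0)
third = limiter.allow(tokens=10, now=2.0)    # rejected: request cap
later = limiter.allow(tokens=10, now=61.0)   # earlier requests have expired
```

Passing `now` explicitly keeps the sketch deterministic; a real client would typically use `time.monotonic()`.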

Real-Time Monitoring

Customers gain access to usage dashboards tracking tokens, latency, throughput, and error rates. Logs and analytics help identify bottlenecks during prototyping and scaling.
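
To make the dashboard metrics concrete, the sketch below derives token usage, 95th-percentile latency, throughput, and error rate from a handful of request records. The record fields (`latency_ms`, `tokens`, `status`) and the function name `summarize` are hypothetical; the platform's actual log schema may differ.

```python
import math


def summarize(records, window_s):
    """Aggregate request records collected over `window_s` seconds."""
    if not records:
        raise ValueError("no records to summarize")
    latencies = sorted(r["latency_ms"] for r in records)
    # Nearest-rank percentile: smallest value with at least 95% at or below it.
    rank = math.ceil(0.95 * len(latencies))
    total_tokens = sum(r["tokens"] for r in records)
    errors = sum(1 for r in records if r["status"] >= 400)
    return {
        "total_tokens": total_tokens,
        "p95_latency_ms": latencies[rank - 1],
        "tokens_per_s": total_tokens / window_s,
        "error_rate": errors / len(records),
    }


# Made-up sample data covering a 10-second window.
sample = [
    {"latency_ms": 100, "tokens": 10, "status": 200},
    {"latency_ms": 200, "tokens": 20, "status": 200},
    {"latency_ms": 300, "tokens": 30, "status": 500},
    {"latency_ms": 400, "tokens": 40, "status": 200},
]
stats = summarize(sample, window_s=10)
```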

Optimization Tools

Models are served with optimized GPU utilization, quantization, and request batching. Shared Endpoints reduce inference cost while delivering reliable performance across diverse workloads.
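
The page does not say which quantization scheme is used. As one widely used example, symmetric int8 quantization stores each weight as an integer in [-127, 127] plus a single shared scale, trading a small rounding error for roughly a quarter of the memory of 32-bit floats. The function names and sample weights below are illustrative assumptions.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer codes."""
    return [v * scale for v in quantized]


# Made-up weights, for illustration only.
weights = [0.82, -1.27, 0.05, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Each restored value differs from the original by at most half a step (scale / 2).
```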

Enterprise-Ready Security

Built-in encryption, API authentication, and tenant isolation ensure secure experimentation. Shared Endpoints comply with enterprise data handling policies and can be safely adopted for pilots and POCs.